Hello,

I’m running into a streaming buffer underrun when simulating multiple signals at once, for example one RF signal with a second one as interference, or GNSS Upper L-Band and Lower L-Band signals on separate GPUs. The underrun occurs whenever the combined sampling rate exceeds 25 MSps.

Below are the specifications of the PC tower being used:

- Skydel version: 23.5.1

- Operating System: Ubuntu 20.04 LTS

- Output configuration: X300 (1G ETH)

- GPUs: RTX 4000 (6144 CUDA Cores) and RTX A4000 (2304 CUDA Cores)

Performance tests for both setups yielded satisfactory results:

- Two frequencies (L1C and L2C): a score of 10.85

- Interference (L1C/A + Galileo E1, with interference on L1C/A): a score of 8.88

I’ve reviewed past discussions on similar issues:

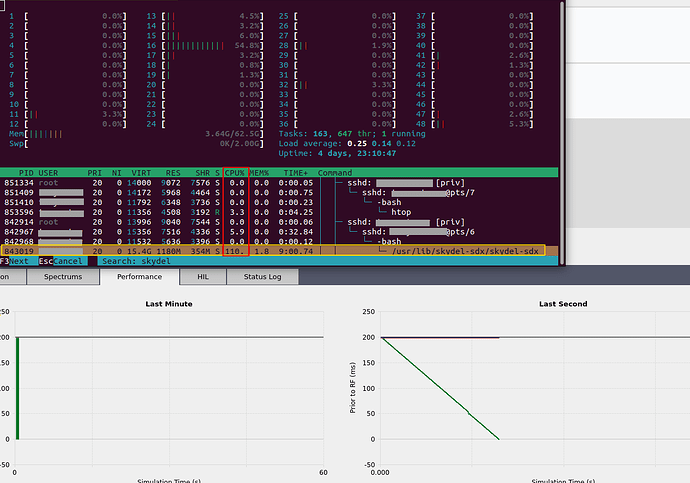

I also checked the performance across multiple CPU cores and found no issues.

I have set the USRP Frame Size to 8000 bytes, the MTU to 9014 bytes, the Engine Latency to 500 ms, and the Streaming buffer to 1000 ms, but the issue persists.
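For reference, here is a rough sanity check of the 1G Ethernet link budget at these sampling rates. It is only a sketch: it assumes 16-bit I and 16-bit Q samples on the wire (4 bytes per complex sample), which I have not confirmed for this setup, and it ignores packet header overhead. The function names are just illustrative.

```python
# Rough link-budget check: can a 1 GbE link carry the combined sample stream?
# Assumption (not confirmed for this setup): 16-bit I + 16-bit Q per complex
# sample, i.e. 4 bytes per sample. Packet header overhead is ignored.

BYTES_PER_SAMPLE = 4        # assumed wire format, 2 bytes I + 2 bytes Q
LINK_CAPACITY_BPS = 1e9     # nominal 1 Gigabit Ethernet capacity


def required_bitrate(sample_rate_sps, bytes_per_sample=BYTES_PER_SAMPLE):
    """Payload bit rate in bit/s needed to stream at the given sample rate."""
    return sample_rate_sps * bytes_per_sample * 8


def link_utilization(sample_rate_sps, link_capacity_bps=LINK_CAPACITY_BPS):
    """Fraction of the nominal link capacity consumed by the sample payload."""
    return required_bitrate(sample_rate_sps) / link_capacity_bps


if __name__ == "__main__":
    for rate_msps in (12.5, 25.0, 50.0):
        rate_sps = rate_msps * 1e6
        mbit = required_bitrate(rate_sps) / 1e6
        print(f"{rate_msps:5.1f} MSps -> {mbit:7.1f} Mbit/s "
              f"({link_utilization(rate_sps):.0%} of 1 GbE)")
```

Under that assumption, 25 MSps already needs about 800 Mbit/s of payload, so the 1G link would have very little headroom above that rate regardless of GPU or CPU performance.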

I am unable to upload the performance.csv file to this discussion in order to show further details.

Any ideas on how I can resolve this issue?